Decentralized Data Storage & Delivery

Extant technologies, products, issues and possibilities…

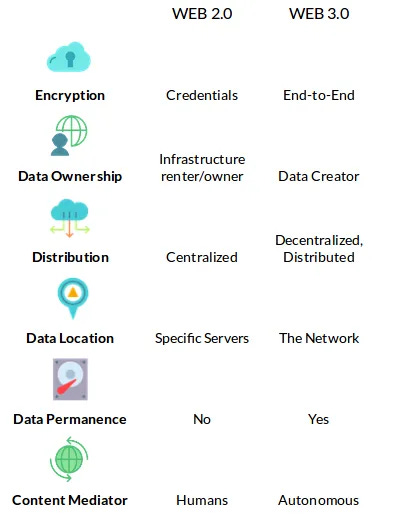

Is decentralized data storage and delivery—an essential Web 3.0 technology—ready for prime-time? Spoiler alert, no.

It might be plausible, but current products do not even seem to be headed in remotely the right direction.

Warning: ‘I am AnonyMint’, with a reputation for attracting the ire of just about every crypto project I felt bothered enough to waste my time analyzing, because I point out numerous egregious flaws that are often baked into the design invariants (i.e. thus insoluble). This blog will carry on that tradition.

“IOTA is entirely centralized and requires a Coordinator they refuse to remove and let it run decentralized because they know their consensus technology is a (fabulous technobabble) lie.” — AnonyMint

Blockchain Randomness

Elusive reliable, non-co-opted, public[14]¹ random number generation (RNG) is required for enabling some capabilities and security protocols on blockchain, including any blockchain enforced “proof of replicated storage.” For example, the developer of Bitcoinj Mike Hearn discovered in 2013 that obscure bugs in Java’s RNG on Android rendered all crypto wallets insecure. Lack of trustless RNG can imperil not just cryptocurrency but even smart contracts, fairness of voting, and many other capabilities that impact the viability of Web 3.0.

As contrasted with mathematical measures of entropy, which are not measured relative to systemic outcomes, cryptographic entropy, i.e. “uncertainty an attacker faces to predict the randomness,” can’t be created ex nihilo by a cryptographic function alone, not without some mechanism¹ which proves verifiable bounds† on the ability of an attacker to bias the created randomness. Cryptographic entropy aka min-entropy is unpredictable data that verifiably bounds the† attacker to the parameters of a cryptographic protocol or in some cases provides statistical outcomes the attacker can’t predict with any higher probability than the uniform distribution (i.e. flipping a fair coin, rolling a fair die, etc).
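To make the distinction concrete, here is a minimal sketch (my illustration, with hypothetical function names) comparing Shannon entropy with min-entropy for a coin. Min-entropy is the standard worst-case measure, the negative base-2 logarithm of the attacker's best single guess, so any bias an attacker can introduce lowers it faster than it lowers the average-case Shannon figure.

```python
import math

def shannon_entropy_bits(probs):
    """Average-case entropy in bits: -sum(p * log2(p))."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def min_entropy_bits(probs):
    """Worst-case entropy in bits: -log2 of the most likely outcome,
    i.e. the success probability of the attacker's best single guess."""
    return -math.log2(max(probs))

fair_coin = [0.5, 0.5]
biased_coin = [0.75, 0.25]  # hypothetical bias introduced by an attacker

for name, dist in (("fair", fair_coin), ("biased", biased_coin)):
    print(name, shannon_entropy_bits(dist), min_entropy_bits(dist))
```

For the fair coin both measures give exactly one bit; for the biased coin the min-entropy falls below the Shannon entropy, which is why the cryptographic measure is the relevant one for a beacon.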

Is an appropriate orthogonal randomness taxonomy: secret vs. public, trustless vs. trusted and absolutely vs. contextually bias-resistant? Transparency is required for verification of trustless and/or mode of bias resistance but doesn’t necessarily provide either. Trustless and separately absolutely unbiased are always in the real world some reasonable assumption of an approximation, e.g. substitute a hash function for a RO. Trusted by definition is never verifiable. Contextual bias resistance (e.g. zk-STARK) is a transparent, trustless (e.g. in ROM) verifiably-bounded result of the cryptographic protocol. Whereas, absolute bias resistance is an attempt to extract entropy from uncontrollable, unpredictable phenomena (e.g. radioactive decay), most often in form of trusted, secret randomness for private keys. Bitcoin as trustless-approximate, provides a contextual public randomness beacon verifiably-bounded only up to the opportunity cost of replacing block solutions and other caveats (e.g. orphan rate) of its protocol.

Proof-of-work has native randomness (aka minimum entropy) up to (or equal if no attack) the bits of mining difficulty[11]² but only presuming a negligible rate of orphaned block solutions and an unprofitable cost for an attacker to discard block solutions to bias the randomness. It logically follows that the entropy or security thereof of said randomness increases with the advance of Moore’s law but declines with declining block revenue and even worse as[14] transaction fees increase as a fraction of mining revenue. Proof-of-work is apparently the only verifiably-bounded, trustless[14] (aka transparent) cryptographic entropy (aka unbiased randomness) beacon ever conceived.
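As a rough sketch of what “bits of mining difficulty” means numerically, the following converts a Bitcoin-style difficulty into the base-2 logarithm of the expected number of hashes per block. The only protocol fact used is Bitcoin's difficulty-1 target of 0xFFFF · 2^208; treating that logarithm as the upper bound on beacon entropy is the reading of the paragraph above, not an independently established result, and the function names are mine.

```python
import math

# Bitcoin's difficulty-1 target (the largest allowed target).
DIFF1_TARGET = 0xFFFF * 2**208

def expected_hashes_per_block(difficulty: float) -> float:
    """Expected number of hash evaluations to find one block solution."""
    return difficulty * (2**256 / DIFF1_TARGET)

def difficulty_bits(difficulty: float) -> float:
    """log2 of the expected hashes per block: the 'bits of difficulty'."""
    return math.log2(expected_hashes_per_block(difficulty))

# Difficulty 1 is roughly 2**32 expected hashes; each doubling adds one bit.
print(difficulty_bits(1.0))
print(difficulty_bits(2.0) - difficulty_bits(1.0))
```

Under this reading a costlier-per-hash algorithm at the same monetary difficulty yields fewer expected hashes and therefore fewer bits, which is the point made in footnote ².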

Asymmetric verification time,[14] iterated verifiable delay functions (VDF)[7]³ (c.f. also[8]) are proposed[11] so a proof-of-work RNG can’t compute its bias before the (reasonably probable reorganization finality) countdown for including the random value in the block. To prevent biasing dependencies on the (to be) generated final output random value, said dependencies must be committed before the VDF completes, yet this hen-egg dilemma is incongruous with validation of said dependencies’ (e.g. transactions’) correctness (in that said validation depends on the VDF output randomness) required for proposing the block solution that’s the input of the VDF. Additionally, the security of an efficient, production-ready VDF with proof-of-delay is an unresolved research topic. An alternative VDF approach is a refereed delegation model[11] in lieu of an asymmetric proof-of-delay.
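The asymmetry between slow evaluation and fast checking can be illustrated with iterated modular squaring, the sequential core that time-lock puzzles and several VDF proposals build on. This is only a sketch under loud assumptions: the modulus is a toy built from two small Mersenne primes, and the fast path uses a secret trapdoor (the factorization), which a real VDF replaces with a publicly verifiable proof-of-delay. All names here are hypothetical.

```python
# Toy modulus from two known Mersenne primes (far too small for real use).
P = 2**31 - 1
Q = 2**61 - 1
N = P * Q

def eval_sequential(x: int, t: int, n: int) -> int:
    """Compute x^(2^t) mod n by t squarings; each step needs the previous one."""
    y = x % n
    for _ in range(t):
        y = (y * y) % n
    return y

def eval_with_trapdoor(x: int, t: int, p: int, q: int) -> int:
    """Shortcut for whoever knows p and q: reduce the exponent mod phi(n).
    Valid when gcd(x, n) == 1 (Euler's theorem)."""
    phi = (p - 1) * (q - 1)
    return pow(x, pow(2, t, phi), p * q)

slow = eval_sequential(5, 100_000, N)       # t sequential steps
fast = eval_with_trapdoor(5, 100_000, P, Q)  # two modular exponentiations
print(slow == fast)
```

The cost of the slow path grows linearly with `t` while the shortcut barely changes, which is the ratio that matters for averting the denial-of-service bombardment discussed below.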

Vitalik’s VDF attacker is perhaps only credible to the extent of needing to allow for perhaps a ‘small factor’ security buffer of perhaps one order-of-magnitude speedup in the targeted delay, because presumably such an ASIC is more profitable by selling many copies to the community than killing the ecosystem once. Whereas, a VDF’s asymmetric verification ratio is more critical to avert bombardment of slow and costly verification, than instead employing insidious trust (e.g. WoT) as the primary defense against such denial-of-service (DoS) attacks.

† Bounded means the prospective attacker is verifiably compartmentalized, constrained, limited in some transparent, trustless way—e.g. game theoretic economics in Nakamoto proof-of-work or informational degrees-of-freedom in zk-STARKs discussed below—such that the attacker can’t succeed by sufficiently biasing the outcomes. Transparent, trustless means necessary conditions are public knowledge, at least up to some presumed reasonable (trusted?) approximation such as for zk-STARKs the assumption that a hash function is an acceptable substitute for a random oracle (RO).

¹ ‘Reliable, non-co-opted and public’ are characteristics of any truly transparent, trustless, permissionless “system.” System instead of ‘mechanism,’ ‘algorithm’ or ‘protocol,’ because it executes autonomously and is holistic (not just a mechanistic software device) involving game theory, economic incentives and emergent order via chaotic participation/interaction.

² Which can be visualized as rolling dice a specified number of times. But currently insufficient bits of entropy for some of the aforelinked capabilities, although sufficient for blockchain enforced proof of replicated storage. And consequentially proof-of-work hashing algorithms such as Monero’s RandomX (which btw strives to be ASIC and GPU resistant and) which are orders-of-magnitude more costly to compute per output hash compared to Bitcoin’s SHA256, thus generate an order-of-magnitude or two fewer bits of entropy for the equivalent monetary mining difficulty. Blake as the proof-of-work hash function might increase entropy by ~4 bits compared to SHA256.

³ Tangentially, given that an unbounded number of VDF instances could be sampled in parallel, a VDF can only assist in obscuring temporal bias for a random beacon from inputs that due to some mechanism are prohibitively costly to replicate. Bitcoin proof-of-work system with its huge monetarily incentivized computation thus huge precomputation opportunity cost is barely practical for generating small amounts of said entropy.

Proof-of-stake doesn’t have any significant native cryptographic entropy other than some (probably insufficient) contributions of unpredictable, chaotic network asynchrony, which was apparently Nxt’s⁴ only RNG. Ethereum replaced the native randomness (of its former Ethash proof-of-work) with an accumulated groupwise (among all bonded validator’s) signatures entropy that is biasable even for validator sortition, and most precariously so if used for smart contract entropy. Furthermore ʀᴀɴᴅᴀᴏ can be entirely (and surreptitiously) hijacked by an attacker (such as the natural oligarchy and/or by hacking the secret keys) that controls ~50+% of the said validators.
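A toy model shows why an accumulated, groupwise mix is biasable. The sketch below assumes a simplified accumulator that XORs the hash of each reveal into a running mix; it is not Ethereum's actual code, and the reveals are placeholder byte strings. An attacker who controls the last k reveals can privately evaluate every publish-or-withhold combination and pick the outcome it prefers.

```python
import hashlib
from itertools import product

def mix_in(mix: bytes, reveal: bytes) -> bytes:
    """Toy accumulator: XOR the SHA-256 of a reveal into the running mix."""
    digest = hashlib.sha256(reveal).digest()
    return bytes(a ^ b for a, b in zip(mix, digest))

def candidate_outputs(mix: bytes, attacker_reveals: list) -> set:
    """All final mixes reachable by publishing or withholding each of the
    attacker's trailing reveals (withholding means skipping the slot)."""
    outputs = set()
    for choices in product([False, True], repeat=len(attacker_reveals)):
        m = mix
        for reveal, publish in zip(attacker_reveals, choices):
            if publish:
                m = mix_in(m, reveal)
        outputs.add(m)
    return outputs

honest_mix = mix_in(bytes(32), b"honest reveal")
options = candidate_outputs(honest_mix, [b"r1", b"r2", b"r3"])
print(len(options))  # number of outcomes the attacker can choose among
```

With k trailing slots the attacker chooses among up to 2^k outcomes, at the price of whatever the protocol charges for the withheld slots.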

Any non-proof-of-work form of public cryptographic entropy is opaquely dependent on trusting that the permissioned membership that generates the entropy doesn’t surreptitiously collude. Whereas, even an attack by a 50+% oligarchy of proof-of-work miners is verifiably-bounded to a fractional reduction of the aforementioned entropy if limited to a cost-free assumption, accomplished by forcing a non-surreptitious (i.e. fully visible aka transparent) decrease in the mining difficulty as the result of censoring all the block solutions of the non-complicit minority and (which is a cost reduction) by the attacking oligarchy decreasing its own hash rate to equal that of the minority. Or otherwise bounded by the aforementioned (if prohibitive) opportunity cost. Note an individual miner’s comparative profit options are not transparent, but the opportunity costs of withholding block solutions are.
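The “fractional reduction” can be put into numbers under a simplifying assumption that is mine: after the censorship attack, difficulty retargets to a hash rate equal to the minority's former share of the network. The entropy lost is then the base-2 logarithm of the inverse of that share. The starting figure of 78 bits is a hypothetical placeholder.

```python
import math

def entropy_loss_bits(minority_share: float) -> float:
    """Bits of difficulty lost when the effective hash rate drops to
    `minority_share` of the pre-attack network hash rate (0 < share <= 0.5)."""
    if not 0 < minority_share <= 0.5:
        raise ValueError("minority share must be in (0, 0.5]")
    return -math.log2(minority_share)

def bits_after_attack(bits_before: float, minority_share: float) -> float:
    return bits_before - entropy_loss_bits(minority_share)

# Hypothetical: 78 bits before the attack, censored minority had 25%.
print(bits_after_attack(78.0, 0.25))
```

The drop in difficulty is recorded in the block headers themselves, which is what makes this bound transparent rather than surreptitious.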

⁴ Reminiscent of the 2013 Mastercoin era.

Liveness attack

Thus it is not surprising that injecting entropy, albeit this non-proof-of-work genre of a lambasted,† inferior trusted, permissioned, generated-groupwise form of randomness beacon [Dfinity aka Internet Computer], is employed [Ethereum 2.0] as a defense [Cardano] against proof-of-stake’s stake-grinding[2] attack (to which Nxt was more vulnerable than the aforementioned, but less so than the first proof-of-stake blockchain Peercoin).

The Ethereum 2.0 technical handbook points to the 𝚗 - 𝚝 liveness vulnerability in Dfinity’s threshold signature approach. Yet commensurate with that document’s ostensibly overly optimistic stance towards the documented safety vulnerability in their aforementioned accumulation approach, they don’t acknowledge that Dfinity’s liveness-vulnerable random beacon design choice (c.f. also pg. 2) is a tradeoff for an opaque (i.e. surreptitiously violable, still not trustless, transparently verifiably-bounded) unbiasable entropy safety bound (i.e. number of colluding to) 𝚝 - 𝟷 member signatories because it’s an interactive form of a verifiable random function (VRF).⁵ Ethereum’s ~50+% complete takeover “safety bound” scenario by the natural oligarchy is lower than the 𝚝 of ⅔, but it has no formal (not even opaque) bound for being unbiasable. Yet these bounds seem rather pointless, because it is doubtful that there would not always be at least one dishonest signatory out of ½ or ⅔ in the presence of the natural oligarchy. And even if so, the liveness would be tenuous and precarious: the only way to recover from a permanent liveness stall is a hard fork. However the centralized consortium (aka oligarchy) ostensibly (even if surreptitiously, undetectably) controlling each of these (thus centralized) blockchains will presumably prevent any liveness stall because they have more to gain by parasitizing in other ways.

Algorand’s validator sortition deployment of the same VRF as Chainlink enables the natural oligarchy to precompute the randomness in advance for every successive chain of blocks where their validators will be chosen as the block proposer. Which probably also enables complete takeover with some mechanism in their protocol which I do not want to expend the time to figure out.

Actually Ethereum 2.0 also has a liveness vulnerability equal to its aforementioned safety vulnerability because as diagrammed above the ~50+% oligarchy can control all the validator slots and the LMD fork choice rule, thus stall the blockchain if they wish. Thus the standard equation holds for Byzantine fault tolerant (BFT) consensus aka Byzantine agreement, 𝘭𝘪𝘷𝘦𝘯𝘦𝘴𝘴 = 𝟷 - 𝘴𝘢𝘧𝘦𝘵𝘺 with 𝘭𝘪𝘷𝘦𝘯𝘦𝘴𝘴 = 𝘴𝘢𝘧𝘦𝘵𝘺 = ½ (aka 50%) in Ethereum 2.0. And Ethereum’s aforementioned ʀᴀɴᴅᴀᴏ design (which appears to be a hack, an ethos of informality preferred by Vitalik) accumulates randomness additively in a sortition system that involves incomplete formal game theory and math, and thus may have unidentified exploits and vulnerabilities.

Large market-cap proof-of-work blockchains with their own unique ASIC-friendly (i.e. not RandomX) hash algorithm, aren’t vulnerable to a liveness attack other than a 50+% censorship attack.

Consequently there’s ostensibly no actual (only an opaque illusion of) trustworthy randomness in proof-of-stake systems and their analogs such as Filecoin, because they lack proof-of-work’s transparent, trustless unforgeable costliness and verifiably-bounded native randomness. And their substitute of permissioned, membership threshold cryptography is subverted (analogous to the tradeoff between security and liveness in permissioned consensus groupings, such as any non-proof-of-work analog of proof-of-stake) if for example the assumed ⅓, ½ or ⅔ thresholds of non-malicious collusion are breached. Meaning the “randomness” could be (most likely is) surreptitiously (i.e. possibly even undetectably) deterministically biased: the antithesis of cryptographic entropy. These systems have more holes than Swiss cheese waiting with open legs for the more powerful entity behind the curtain to eventually wrest control from the parasitizing natural oligarchy to wreck scorched earth all over the altcoin, shitcoin promised land.

† Proof-of-stake in general is widely criticized by those expert enough to comprehend the issues fully and I have linked to some expert examples.

⁵ Paradoxically, noninteractive VRFs can’t be achieved with fully-noninteractive, zero-knowledge proofs (NIZKP) (at least not in IP) because of the hen-egg dilemma that such (at least in IP) NIZKPs require the unbiased public randomness that VRFs exist to (but ostensibly don’t, at least not transparently) provide.🤯 However, proving the valid execution of such a randomizing function (e.g. a cryptographic hash function) in zero knowledge (of the secret seed or key) with any zk-SNARG should also work because the requisite randomness is either implicitly public in the random oracle model (for zk-STARK/PCP in NP) or generated interactively but only once with a trusted setup (for zk-SNARK/QSP) of the designated circuit’s reusable public parameters, with ongoing proofs of the circuit execution being noninteractive. Although both are presumably less efficient than other formulations such as the VRF adopted by Chainlink (discussed in the next section) or the aforementioned threshold signature random beacons.

Tangentially it is interesting (to myself at least) to note from the cited foundational paper on noninteractive zero knowledge in the interactive case, that the ⅔ completeness and ⅓ soundness thresholds correspond conceptually to the ⅔ safety and ⅓ liveness thresholds in asynchronous (i.e. unbounded interactive timing) BFT protocols for Byzantine agreement in non-proof-of-work consensus protocols. The noninteractive case with its safe ‘parallel composition’ can drive these thresholds to 1 and 0 at the limit. Parallel composition ostensibly has a conceptual analog in bounded asynchronous BFT as well, in that by dividing up heterogeneously that which is accepted as the fault tolerant or consensus result, it’s possible to degrade consensus functionality heterogeneously with more than ⅓ faults.

Smart contracts

The following is notwithstanding the previously discussed opaque, validator sortition protocols for non-proof-of-work blockchains for which the aforementioned multiparty computation, threshold cryptography may be a dubious, yet practical solution[illusion] (even if ignoring/accepting the aforementioned issues of the natural oligarchy surreptitiously biasing the randomness).

Many of the aforementioned capabilities and security protocols that require randomness will be provided by smart contracts, thus in some cases possibly necessitating more bits of randomness per block than the aforementioned (including proof-of-work’s) randomness beacons can provide. More crucially, as aforementioned in the context of the VDF, is the hen-egg dilemma for a random beacon: the smart contract dependencies must be committed before the randomness is known, yet the block assembler/proposer (aka a miner in proof-of-work) must validate the correct execution of the smart contract transactions before committing to the block which provides the randomness of the beacon.

Enter Chainlink’s VRF which enables smart contracts to commit to the randomness before the randomness is publicized. Unfortunately Chainlink’s misleading marketing does not conspicuously advertise its vulnerabilities, especially not for a layman:

The value 𝑦 is consequently unpredictable to an adversary that cannot predict 𝑥 or learn 𝚜𝚔

The “random” in “verifiable random function” means “entirely unpredictable (uniformly distributed) to anyone who doesn’t know the seed or secret key.”

That means if the Chainlink provider colludes with anyone, such as a block proposer, who can bias the “unpredictable block data” for the seed, then the colluders can also bias the resulting randomness by sampling it as many times as they need to⁶ (aka precomputation) before committing the data to the blockchain. The touted cryptographic proofs against tampering are thus impotent and inapplicable because when publicized they represent only a proof that the random function was correctly applied to the seed as publicly committed after any precomputation with other seeds.
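The collusion described above can be sketched in a few lines. As a loudly stated assumption, HMAC-SHA256 stands in for the VRF here (a real VRF also emits a public proof, which does not change the attack), and the seed is modeled as block data plus a counter that the colluding proposer is free to vary. None of these names come from Chainlink's code.

```python
import hashlib
import hmac

def toy_vrf(secret_key: bytes, seed: bytes) -> bytes:
    """Stand-in for a VRF: deterministic and unpredictable without the key."""
    return hmac.new(secret_key, seed, hashlib.sha256).digest()

def grind_seed(secret_key: bytes, block_data: bytes, wanted: int, bits: int,
               max_tries: int = 1_000_000):
    """Colluding provider and proposer try seeds privately until the low
    `bits` bits of the output equal `wanted`; only the winner is committed."""
    mask = (1 << bits) - 1
    for nonce in range(max_tries):
        seed = block_data + nonce.to_bytes(8, "big")
        output = toy_vrf(secret_key, seed)
        if int.from_bytes(output, "big") & mask == wanted:
            return seed, output, nonce + 1
    return None

result = grind_seed(b"provider secret key", b"block data", wanted=0, bits=8)
if result is not None:
    seed, output, tries = result
    print(tries, output.hex())
```

Any verifier checking the committed seed against the output will find the function correctly applied; the expected work to bias k bits is only about 2^k private evaluations.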

Not clear to me whether Chainlink is employing multiple providers per randomness request. If Chainlink did implement this then the randomness would remain unbiased if at least one of the said providers was not colluding but any one provider could attack the liveness. And since each provider has to release publicly, one gets to release last and thus can bias the entire result. Providers seem to have an irresistible motivation to surreptitiously collude, because it’s extra income (via the shared profits of the biased randomness) without any repercussions.

Thus randomness in smart contracts is probably not trustworthy, unless it is proof-of-work’s limited native randomness.

Vitalik ostensibly naively (and incorrectly) believes that Ethereum does not have a natural oligarchy thus not one of those projects in his words ‘where the community is nice but the project is just fundamentally incapable of achieving what it’s trying to do.’

History Tidbit: ironically in 2013 Charles Hoskinson before deciding to team up with Vitalik to create Ethereum, had privately asked my opinion on the viability of the project. I had analyzed Dagger (not Dagger-Hashimoto which didn’t exist yet) pointing out it wouldn’t be ASIC resistant. And I had suggested that Turing-complete smart contracts would be a can-of-worms introducing many seemingly intractable game theory and security issues, such as aforementioned biasing the randomness or reorganizing the blockchain (for that biasing and/or) to capture maximal (aka miner) extractable value (MEV) profit which exceeds the cost of the reorg.

⁶ Which ironically is analogous to stake-grinding in that being reminiscent of computation normally done in proof-of-work which has some cost depending on how many bits of entropy to be biased.

What Won’t Work

History Tidbit: my comment about Filecoin on Arthur Hayes aka Cryptohayes’ blog—which btw recounts and links to my history of being afaik the first to publicly propose proof-of-disk-space—prompted me to initiate this deeper dive.

Soft-forks: Vitalik clearly didn’t understand the game theory and finer details of the impostor Bitcoin’s 2017 “soft-fork.”

A soft-fork isn’t more coercive (Vitalik agrees†) nor does it force anyone to update their clients. Sheesh, the entire rationale for its “soft-fork” nomenclature is that supposedly users have the option to continue using the legacy protocol. For example, to remain on the legacy protocol Bitcoin, spend only to addresses that begin with 1, not the impostor Bitcoin protocol 3 or bc1.

A “soft-fork” doesn’t exist in any system that has a Nash equilibrium, i.e. that doesn’t devolve to trust (of the natural oligarchy), because the legacy protocol has an immutable perspective of the protocol and thus views the mutated protocol as ANYONECANSPEND. Thus in Bitcoin any “soft-fork” is a hard-fork waiting to complete as soon as the UTXO booty of ANYONECANSPEND is sufficient to finance restoring the Nash equilibrium by the miners (who naturally have first dibs on) taking those ANYONECANSPEND as donations for the restoration. Every fool who has stored their Bitcoin in addresses that begin with 3 or bc1 will lose all their ₿ when the miners will “take it” [accept the generous donations] from naive users’ wallets without ever touching the private keys. Heck the looming impostor Bitcoin ETFs are even promising to lose all the looming institutional investors’ ₿ on their behalf even though the private keys will be perfectly safe.

All the silly attempts at a counter-argument have been unequivocally and resoundingly refuted by Mircea Popescu and elaborated for n00bs by myself, myself ostensibly being inter alia the only (completely ignored) person to ever correctly explain why Satoshi employed double-hashing. (note: my aforelinked gist also archived here)

Anyone Can Spend “hack” by legacy miners will reappropriate ~17+ million BTC lacking private keys

How the Bitcoin legacy Anyone Can Spend Jan 1988 Economist Mag cover story Phoenix rises from the ashes of instant monetary reset

Arthur “Crypto” Hayes ostensibly does not realize he is describing how the Bitcoin ANYONECANSPEND event will destroy the extant financial system in literally the blink-of-an-eye.

† But hard-forks aren’t really coercive either as Vitalik claims, as you just sell the one you disagree with and buy the one you prefer. And unless the natural oligarchy has some parasitic “mine-the-users” reason to prefer the hard-fork, they would naturally prefer immutability if they care about the trustless store-of-value for their stack.

Proof-of-space Mining: Originally I had publicly (todo: search for the post) dismissed my aforementioned proposal—because my goal in 2013 was to replace proof-of-work with proof-of-space as the Schelling point for blockchain consensus—said dismissal ironically was the correct intuition, and especially if storing user data in said space, even though at the time my “insoluble Sybil attack” reasoning was flawed. Afair, I hadn’t applied the effort to think through how to defeat Sybil attacks with cryptographic proof-of-replication[1] — wherein otherwise a single, shared copy of stored data is presented as a deception of a plurality of entities masquerading as separate nodes due to different IP addresses or ‘virtual machines.’

My 2013 intuition remains correct though for several reasons — the first few (i.e. the user data case) fall under the umbrella of incentives incompatibility.

Freeloading: space miners could store only their own data.

Non-fungibility aka discordance-of-wants: not all stored data has the same value to the network.

Socialism: no responsibility market for the infinite limit cost of aged data retention.

Nothing-at-stake[2], subjective,[3][4] oligopolistic[5]: applies to any blockchain consensus which doesn’t burn a resource (not even itself such as its own Moore’s law amortization⁷) or which transfers the expense of doing so by doing useful work[6] to the parasitic wealth extraction of the inevitable oligarchy.

Implausible: analogous to stake-grinding, the only way to convert proof-of-replicated-space to the first-to-find, first-to-publish Schelling point (aka Focal point) required for a robust permissionless,[5] trustless, synchronized-wallclock-time-agnostic[3] blockchain⁸ is to essentially convert it to proof-of-work[6] such as some form of computational proof-of-work employing a VDF (essentially as the hash function, or at least a delay function with the low, asymmetrical verification cost of proof-of-work) layered on top of the non-interactive proofs-of-replicated-space as allowed nonces.

⁷ Although random-access, storage space does follow Moore’s law, the electrical consumption is negligible and even nil if not actively accessed. Thus amortization lifespan is that of the device not the much more accelerated electrical unprofitability threshold cliff of proof-of-work ASICs. Moreover, any otherwise unused excess space is approaching nearly nothing-at-stake — an example of depreciation expense transferred to useful work and additionally so if the storage is simultaneously rewarded for data retention and/or delivery.

⁸ Which all the nothing-at-stake systems such as proof-of-stake or any non-proof-of-work lack. And ironically perhaps the aforementioned Peercoin (and to a lesser extent Nxt)—which did not employ some form of the random beacon for validator sortition found in the more recent “improved” proof-of-stake variants—thus economically reverted to a form of proof-of-work as stake grinding. So the “improvement” ostensibly made it easier for the natural oligarchy. My mother quips that “upgrade” is an oxymoron.

System-wide, like-kinded rewards for data retention and/or delivery: ditto non-viable due to aforementioned freeloading, non-fungibility and socialism. Additionally there’s no objective mechanism to prove that data was or wasn’t delivered — a he said, she said, blame game[9] ambiguity dilemma. And as an aside generally note the issue of blame in asynchronous networks,[10] c.f. also ‘FLP85’ in page footnote‘¹’[3].

Thus the only solutions I can envision are those where users individually choose (and share reputational experience in a WoT on) which data providers they pay for retention and/or delivery. Proof-of-replication can be incorporated to squelch Sybil attacks on the list of providers users can choose from.

Even relying on the system for issuing data retention payouts exchanged for periodic proof-of-replicated-space (aka proof-of-spacetime[1]) is fraught because the consensus lead block proposer has discretion over which said proofs (and transactions) to include in the block.

What might work

A possible solution for periodic data storage retention payouts and transaction censorship in general which retains trustless automation so the user doesn’t have to run and maintain their own network daemon, might be a permissionless sharded⁹ smart contract system (let’s refer to it as layer 0 or layer 1′) where the approximate-epoch-based assemblage of tangled shards is periodically checkpointed by the singular block assembler such that the mutually-cross-hashed (i.e. cryptographically interwoven) tangle prevents discretionary, selective exclusion (i.e. a 50+% attacking oligarchy would either need to accept all transactions or no transactions¹⁰) because of the emergent order from chaos as the users’ automated clients (via user-designated WoT triangulation and possibly aided when needed by manual user intervention with social communication) route away from uncooperative shards. This is a form of temporal weak subjectivity[4] but unlike proof-of-stake still orthogonal to and not injecting weak subjectivity into the overarching objective consensus layer of proof-of-work blocks. To divvy up storage retention payouts, the smart contract would need to be programmed in a special way to allow parallel execution across a plurality of shards, e.g. the set of replicated-space proofs is accumulated in parallel during one block period (i.e. tangentially note this still has synchronized-time-independence, not devolving to some analog of proof-of-stake) and then paid out by the smart contract on the next block period.
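Since the design details are omitted here, the following is only a toy reading of “mutually-cross-hashed”, with every name and rule an assumption of mine: each shard header commits to the hashes of all shard headers from the previous epoch, and a header may be checkpointed only if everything it references is checkpointed too. Under that rule, censoring one shard's header makes every later header on every shard ineligible.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class ShardHeader:
    shard_id: str
    epoch: int
    tx_root: bytes
    parents: tuple = ()  # hashes of ALL shard headers from the previous epoch

def header_hash(h: ShardHeader) -> bytes:
    m = hashlib.sha256()
    m.update(h.shard_id.encode())
    m.update(h.epoch.to_bytes(8, "big"))
    m.update(h.tx_root)
    for parent in h.parents:
        m.update(parent)
    return m.digest()

def includable(headers, censored) -> set:
    """Hashes of headers that can still be checkpointed once `censored`
    hashes are excluded: a header needs every one of its parents included."""
    by_hash = {header_hash(h): h for h in headers}
    ok = set(by_hash) - set(censored)
    changed = True
    while changed:
        changed = False
        for hh in list(ok):
            if any(p not in ok for p in by_hash[hh].parents):
                ok.discard(hh)
                changed = True
    return ok

epoch0 = [ShardHeader(s, 0, b"root-" + s.encode()) for s in "ABC"]
prev = tuple(header_hash(h) for h in epoch0)
epoch1 = [ShardHeader(s, 1, b"root-" + s.encode(), prev) for s in "ABC"]
all_headers = epoch0 + epoch1

print(len(includable(all_headers, set())))                     # nothing censored
print(len(includable(all_headers, {header_hash(epoch0[1])})))  # shard B censored
```

In this toy the censor is left with only the other shards' stale epoch-0 headers, which is the “all transactions or no transactions” choice in miniature; the real construction would also need the routing-away behavior of user clients, which is not modeled.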

Unlike for the layer 1 consensus which necessarily enables domination (either via the permissioned set of non-proof-of-work block proposers or the economics and game theory of proof-of-work hashrate mining) the natural oligarchy can’t grab operational control of every shard in my layer 0 conceptualization of sharding, because the users have a self-preservation incentive to route away from misbehaving shards and the permissionless thresholds for standing up a shard will be achievable by any minuscule minority.

This reveals a design I held but was unable to pursue since ~2016 for how to ameliorate the censorship of a 50+% (aka misnomered “51%”) attack on proof-of-work, although I am omitting some details in this descriptive, informal summary. Contrast this with Gavin Andresen’s typical fits of brilliance proposal that would afaics break Nakamoto proof-of-work’s Nash Equilibrium security model by offering a transaction spam vector to override the longest (highest mining difficulty) chain rule with an arbitrary forkathon.

Tangentially my conceptualization of shards can possibly ameliorate much of the aforementioned MEV problem¹¹ while further strengthening censorship resistance, by committing to hashes of transactions for ordering before revealing the transaction data. Shard operators could misbehave by employing the blame game to pretend that reveals weren’t received timely, enabling some limited form of reordering by omission.¹² Note my idea seems implausible to adapt to the normal conceptualization with a mempool model for block proposers which lacks a one-to-one mapping from transaction submission and block proposer/assembler (often aka validator) and thus which is giving rise to flashbots and permissioned mempool devolution.
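The commit-then-reveal ordering can be sketched as follows. This is a minimal illustration with hypothetical names, assuming a salted SHA-256 commitment: the shard fixes the order from commitments alone, so it cannot reorder based on transaction contents, although it can still pretend a reveal arrived late, which is the omission problem noted above.

```python
import hashlib
import secrets

def commit(tx: bytes, salt: bytes) -> bytes:
    """Hiding commitment to a transaction; salt is a fixed 32 bytes."""
    return hashlib.sha256(salt + tx).digest()

class ToyShard:
    def __init__(self):
        self.ordering = []   # commitments, in arrival order
        self.revealed = {}   # position -> transaction bytes

    def accept_commitment(self, commitment: bytes) -> int:
        """Record the commitment and return its fixed position."""
        self.ordering.append(commitment)
        return len(self.ordering) - 1

    def reveal(self, position: int, tx: bytes, salt: bytes) -> None:
        """Accept a reveal only if it opens the commitment at that position."""
        if commit(tx, salt) != self.ordering[position]:
            raise ValueError("reveal does not match commitment")
        self.revealed[position] = tx

    def ordered_transactions(self):
        return [self.revealed[i] for i in sorted(self.revealed)]

shard = ToyShard()
salt_a, salt_b = secrets.token_bytes(32), secrets.token_bytes(32)
pos_a = shard.accept_commitment(commit(b"swap 10 X for Y", salt_a))
pos_b = shard.accept_commitment(commit(b"swap 99 X for Y", salt_b))
shard.reveal(pos_b, b"swap 99 X for Y", salt_b)  # reveal order is irrelevant
shard.reveal(pos_a, b"swap 10 X for Y", salt_a)
print(shard.ordered_transactions())
```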

A dubious alternative formulation is committing to the input of a VDF whose output completes the reveal (after ordering commitments are already complete), thus attempting to make omission an objectively detectable malevolence instead of only weakly subjective. But the shard operator could attempt to pretend it received the transaction submission later than it did. The submitted transaction could specify a maximum delay of acceptance, though the shard operator could convert this to temporal weak subjectivity by lying about their timestamps. Vitalik referred to these as ‘timelock puzzles’ in 2015. His inane proposed solution of making a block invalid if the VDF execution backlog filled up with too many censored transactions belies any comprehension of the vital Schelling point and Nash equilibrium of Nakamoto proof-of-work provided by the economics and game theory of first-to-find, first-to-publish the block solution. Says who will declare a block invalid? And objectively provable by what mechanism that doesn’t involve the ambiguous blame game? 🤦‍♂️ Ditto the obvious flaws in his increasingly bizarre Rube Goldberg fantasies in the remainder of that linked section of his blog post. That cited blog seems out-of-character even for Vitalik, as although I know he prefers fanciful theory and circuitous rumination, he’s intelligent and rarely commits such an obvious gaffe.

Overall the more robust amelioration of MEV might involve programming smart contracts so they can batch execute in parallel across all shards, which provides additional censorship resistance in my hypothesized model and thus even the said reveals can be recorded by any shard not just the shard that recorded the prior hash commitment. Also the smart contract logic becomes more immune to many MEV strategies by aggregating all the transactions in a block into a unified action such that reordering them within a block is pointless except via omission which becomes much less feasible if blocks are forced to include all shards. Although batching enables block reorg to alter the batch size of the slice of transactions from shards considered to be in a block. If all shards must communicate all their transactions to a slave (thus no threat to decentralization) centralized prover (e.g. the block proposer) for the smart contract, scaling is bounded (probably not by network bandwidth but) by non-parallelizable computation, but that’s the case anyway if a smart contract can only run on one shard. Yet some of the computation might be parallelizable on each shard (possibly even reducing communication bandwidth utilization) with the remainder centralized, thus plausibly increasing scaling.
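Why aggregation makes intra-block reordering pointless can be shown with a toy constant-product pool, which is my choice of example contract and not something specified above. Executed one by one, whoever trades first gets the better price, so ordering is worth money; executed as a single batch at one clearing price with pro-rata fills, every permutation of the same trades gives identical results.

```python
from fractions import Fraction

def sequential_fills(reserve_x, reserve_y, buys):
    """Each (trader, dx) pays dx of X into an x*y=k pool, in the given order.
    Returns the amount of Y each trader receives (trader names unique)."""
    fills = {}
    for trader, dx in buys:
        dy = reserve_y - (reserve_x * reserve_y) / (reserve_x + dx)
        reserve_x += dx
        reserve_y -= dy
        fills[trader] = dy
    return fills

def batched_fills(reserve_x, reserve_y, buys):
    """All buys in the block are netted into one trade and filled pro rata,
    so every trader gets the same price regardless of position."""
    total_dx = sum(dx for _, dx in buys)
    total_dy = reserve_y - (reserve_x * reserve_y) / (reserve_x + total_dx)
    return {trader: total_dy * dx / total_dx for trader, dx in buys}

pool_x, pool_y = Fraction(1000), Fraction(1000)
buys = [("alice", Fraction(100)), ("bob", Fraction(100))]

print(sequential_fills(pool_x, pool_y, buys))        # alice first: alice wins
print(sequential_fills(pool_x, pool_y, buys[::-1]))  # bob first: bob wins
print(batched_fills(pool_x, pool_y, buys) ==
      batched_fills(pool_x, pool_y, buys[::-1]))     # order is irrelevant
```

Omission still changes the batch, which is why the text ties this to blocks being forced to include all shards.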

Batching would also plausibly ameliorate the aforementioned (in the VDF discussion) hen-egg inability to employ proof-of-work randomness, by batching the transactions that thus don’t need to be validated before the block solution and its randomness is generated. Thus perhaps fixing all the major outstanding seemingly insoluble problems with smart contracts in the extant formulations.

⁹ Vitalik’s conceptualization of sharding as necessarily minimizing the amount of data each node has to store and process, detracts from understanding sharding more crucially as my stated censorship-resistance decentralization mechanism. If the users as SPV (i.e. light) client nodes can validate transaction activity efficiently with for example zk-STARKs, they need not care that much about how concentrated the processing power of sharded nodes as long as users have the permissionless option to create new shard nodes and switch from malevolent to correctly behaving shard nodes. Vitalik’s folly can easily be visualized as a Sybil attack on the unprovable identity of who is actually controlling the shard nodes — instead my idea focuses on measurable qualities.

¹⁰ Said block assembler could be proof-of-stake or proof-of-work, except that Vitalik is incorrect to imply that, for the censorship case, punishing stake can’t be directed to injure the innocent and that there’s no viable, non-centralized means in proof-of-work for forking away from the attacking oligarchy without the vulnerable, nuclear option of changing the proof-of-work hash. Specifically I’m ostensibly the first person to realize that surreptitiously merged-mining (for either proof-of-stake or proof-of-work) in inconspicuous user transactions can be employed instead; not even a 100% attack could censor unless the attackers never want to allow any transactions from any sharding nodes. Also although proof-of-work has no advantage for discouraging empty or reduced transactions in blocks due to any incurred ongoing expense that must be funded by transaction fees because the network hashrate adjusts to the marginal miners’ profitability, proof-of-work miners (other than repurposeable mining hardware such as Monero’s CPU-only RandomX) turn their ASICs into worthless doorstops if they shut down all transactions in an attack on the only significant blockchain to which their ASICs apply. Whereas stake is always conserved, even for slashing of double-spends (c.f. below), and especially as aforementioned true in the censorship case.

¹¹ Which also makes block reorgs pointless because block proposers can’t reorder the transactions in the shards, unless they operate or collude with a shard that lies about what all the nodes see on the peer-to-peer gossip network.¹²

¹² However, this malevolence would be visible to all nodes on the peer-to-peer gossip network. So my aforementioned, temporal weak subjectivity hypothesis is that client nodes would route away from such uncooperative shards.

Filecoin

Filecoin was redesigned as intended[6] from its original proof-of-work to a system analogous to proof-of-stake wherein the percentage of stake is instead the percentage of the active storage in the network.

Specifically it’s a trusted, permissioned, synchronized-wallclock-time-dependent (i.e. not trustless nor permissionless, c.f. aforementioned Implausible) probabilistic Byzantine fault tolerant (PBFT) consensus based on a timed-epoch, trusted, permissioned-membership BLS threshold signature, drand randomness beacon driving the dubious¹³ §4.18.53 Secret Leader Elections[12] under the control of the extant storage nodes via §2.4.4.6.3 The Power Table maintained by periodic (i.e. each timed epoch) proof-of-spacetime.[13]

Thus if an oligarchy is in control they can surreptitiously maintain control by adding as much storage as necessary when admitting new non-oligarchy storage, since no new storage could be added without the prerequisite oligarchy’s permission. Or any oligarchy could just choose to deny the entry of non-oligarchy storage, subject to detection by triangulation via social consensus.
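The arithmetic behind “adding as much storage as necessary” is simple. If the oligarchy holds a share f of total power and admits x units of outside storage, it keeps its share by adding y = f·x / (1 − f) units of its own. The sketch below just evaluates that expression; the function name is hypothetical and the model ignores everything about Filecoin except proportional power.

```python
from fractions import Fraction


def storage_to_add(share, admitted):
    """Storage the incumbent must add to keep `share` after admitting `admitted` from outsiders.

    Derived from (share*P + y) / (P + admitted + y) == share, which is independent of P.
    """
    if not 0 <= share < 1:
        raise ValueError("share must be in [0, 1)")
    return share * admitted / (1 - share)


# A 2/3 oligarchy admitting 100 units of outside storage must add 200 units of its own.
print(storage_to_add(Fraction(2, 3), 100))
```

Note the cost grows without bound as the share approaches 100%, so the tactic is cheapest for an oligarchy sitting only slightly above the threshold it needs.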

Natural oligarchy

Death, taxes, and egregious concentration of the power-law distribution of wealth & fungible resources are universal, inexorably undefeated invariants. Thus don’t doubt the likely existence of a 50+% oligarchy drawn like flies to honey to all consensus systems, including democracy. Yet proof-of-work oligarchies are more impotent (either for short-term rented hashrate double-spend attacks or long-range censorship) and proof-of-work blockchains more resilient because:

ASIC sunk-costs—unlike storage or CPUs—can’t be repurposed.

Block assembler/miner downtime (e.g. government takedown) isn’t a liveness attack requiring a hard-fork to restart the blockchain. The only form of liveness attack is the mining difficulty non-readjustment bomb attack which doesn’t apply to Bitcoin anymore.

Absence of nothing-at-stake, cost-free-replayable Whac-A-Mole when attempting malfeasance such as double-spends, forks or sortition-transaction-membership-or-proofs censorship.

Vitalik quixotically argues that either the natural oligarchy doesn’t exist or that the community can fork away from malfeasance to bankrupt the oligarchy. Validator deposits shouldn’t be burned for censorship because the aforementioned blame game dilemma could injure innocent validators. For example, an attacker could flood and overwhelm innocent validators with DoS, denying them from proposing a block in their scheduled slot,¹³ and/or the oligarchy’s dominant validator monopoly could ignore block proposals from innocent validators, pretending they never received them. Moreover, without my aforementioned idea for ameliorating the selective censorship in a 50+% censorship-only (i.e. not double-spend) attack on proof-of-work, the oligarchy isn’t likely to pointlessly censor all transactions but just certain ones of high extortion value or (already) complying with OFAC censoring.¹⁴ There’s no objective consensus around which validator didn’t add a transaction from the MyPillow hombre Mike Lindell. Thus the oligarchy will retain their dominant stake and there is no provably objective means to identify what to fork away from, because users do not sign their transactions to only a specific thus blameable validator. Even slashing of validator deposits for double-spends can be ineffective if the profit from the attack is greater than the deposit.

Intrepid Vitalik’s naivete belies the fact that the ~80 - 99% are the power vacuum—and “nature abhors a vacuum and for vacuums of power it’s doubly true”—which are naturally harvested by the ~1 - 20% per the inviolable natural order of the power-law distribution of wealth and fungible resources. Any blockchain which burns ~80 - 99% of its stake (i.e. chops off its face, disembowels itself) to allow its ~1 - 20% economic minority to transact (i.e. to save its skin — the ~80 - 99% majority of thus nearly worthless human protoplasm) will isolate itself from anything economic¹⁵ (i.e. be worthless).

Thus the oligarchy would retain economic control on their fork if there was some inane “social consensus” witch hunt attempt to blame the merely suspicious (not objectively provable, only subjectively culpable) validators. Worse yet, a coordinated, organized fork (analogous to democracy) is centralization and it evokes his weak subjectivity[4] in that (as Vitalik implicitly admitted) only those very well connected nodes (and it’s prohibitively costly for most users to run an Ethereum full node) that were online in real-time would be able to trust the fork. How do you convince users to trust an economic disaster? Not that any of this matters in practice anyway (except the OFAC threat is palpable), because a proof-of-stake oligarchy has more insidious, perhaps even undetectable means of extracting rents from the system, such as MEV extraction and surreptitiously biasing the randomness of smart contracts.

It’s not like the ~80 - 99% organically fork away and construct their fork out-of-chaos emergently via an accumulation of objective, independent, individual actions as is the case with my aforementioned idea for ameliorating the selective censorship in a 50+% censorship-only (i.e. not double-spend) attack on proof-of-work.

The “golden child of crypto” idealistic, admirable, lovable, human, whimsical, physically-awkward Vitalik (who btw I do not dislike nor disrespect, and admittedly is more erudite, even a faster learner, in math/cryptography than moi) ostensibly has it so backasswards that I predict he’ll be ’backsplaining in the rektd future. I’ll anecdotally attribute his folly to being an Aquarius.

In case he secretly doesn’t think decentralization or free market is the good future, then people buy into his [schtick of Jobsian-like, geek-cool-cultural reality distortion field] ideas due to their own short comings (and close to zero knowledge in tech) more than due to his being over insightful or anything along that line, and he is just continuing his crypto experiment while it can last. Another possible scenario is he is gullible to [the inviolable power-law distribution of] money and thus unable to get over the fact that decentralization and/or free market looks better in theories than in practice

The air signs (Gemini, Libra, and Aquarius) are communicative, sharp, and intellectual, but also prone to overindulging in fantasy and theory.

Do you seriously think that the distant constellations that used to be overhead thousands of years ago on your birthdate affect your personality?

I intuitively suspect there may be Lorenz Strange Attractor-like order in chaos in the form of cycles that may apply in this astrological model to some yet-to-be quantified/qualified and understood extent and mechanism. I have not investigated whether my anecdotal experience is confirmation bias driven apophenia.

Proof-of-work is much more resilient in that block proposers (aka miners) are not held hostage by known stake balances or permissioned security deposits; they can do their job without persecution, given that any attempt to f*ck with them is a game of Whac-A-Mole as they can be stood down and up permissionlessly.

¹³ And single secret leader election will not work against the natural oligarchy despite §3’s claims because a correct form relies on public randomness (c.f. §1.2 and ‘Randomness Beacons’ in §2), which I explained doesn’t exist against the natural oligarchy in non-proof-of-work.

¹⁴ Justin Bons must not be aware that a 50+% oligarchy of validators can manipulate the aforementioned ʀᴀɴᴅᴀᴏ randomness beacon to deny any other validators a slot to propose blocks, although for some unknown reason Vitalik ostensibly doesn’t think so. Even if they improve it to mimic Dfinity’s beacon, it will only raise that to ⅔ and incur the ⅓ liveness tradeoff.

¹⁵ @Njaa doesn’t factor in that: a) the natural oligarchy (e.g. exchanges) could short-sell the tokens for profit, b) proof-of-stake validators don’t incur any block production cost (even the liquidity cost can be hypothetically reimbursed by validator re-use) nor any penalties if they release blocks timely either empty or if necessary stuffed with their fake transactions, c) attestation penalties can be averted by enforcing censorship via domination of the consensus, and d) unlike permissioned proof-of-stake validator hostages, proof-of-work miners can be physically moved, can be legally separated from block assembling by being contracted to produce hashes by the assembler in a favorable jurisdiction, and stood down/up independently in the permissionless protocol.

Non-proof-of-work (aka “consortium”) blockchains mine the FOMO

Flies to honey…

Proof-of-work in the form of Bitcoin hard money as (for the very first time in human history) a fixed supply reserve currency will eviscerate (socialism and) the insane asylum colloquially known as democracy.

Analogous to democracy, non-proof-of-work blockchains are entirely centralized deceptions, suitable for obfuscating that adoption is faked and that their protocols are not robust against bugs, self-doxxing attackers or state actors, also enabling their winner-take-all, parasitic, rent-seeking oligarchy to (often insidiously, even not noticed by most) extract the wealth from the system.

Ethereum ostensibly didn’t discard proof-of-work because of a threat of the liveness attack, given Ethash (formerly Dagger-Hashimoto) couldn’t be mined with repurposed Bitcoin SHA256 ASICs and was of sufficient size to be fairly immune to rented hashrate attacks. Nor because of the magician’s deceptive mass hysteria rabbit hat excuse of the “point deer, make horse, 指鹿为马”, carbon-tax-fraud-motivated, non-anthropogenic global warming/global cooling/climate change hoax+religion+idiocracy+parasitism (c.f. also).

“But in the bullshit department a business[sales]man can’t hold a candle to a clergyman … you have to stand in awe of the all-time champion of false promises and false claims — religion.” — George Carlin

Proof-of-stake is still pointless

Probably not even because the inexorable (not ₿’s fixed supply) 𝙴𝚃𝙷 emission (aka minting) rate was highly dilutive (aka high debasement of the money supply) to fund the uncles for the 12 second block period. That emission (as admitted by Vitalik) was eliminated in exchange for lower security. Worse yet, because of hypothetical validator re-use or even shorting the blockchain’s token while attacking, proof-of-stake can’t even accomplish attributing any time preference (i.e. liquidity or interest rate opportunity) cost to staking. Whereas proof-of-work miners (other than repurposeable mining hardware such as Monero’s CPU-only RandomX) turn their ASICs into worthless doorstops if they attack the only significant blockchain to which their ASICs apply. Both proof-of-work and gold have mass but with proof-of-work the mass of non-repurposeable mining hardware is detached from the information of the transaction.

Rather, the implicit objective (even if held unwittingly by some naive parties such as Vitalik) was for the oligarchy to maximize wealth extraction while necessarily taking more control so as to obviate any failure modes that would otherwise arise. Thus propping up the illusion of decentralization while embarking on an even more completely clusterf*cked, hodge-podge, “spaghetti against the wall” Rube Goldberg machine of experimental, likely myriad incentive incompatibilities. Protocol forks increase complexity, thus decrease security and decentralization; be wary of heisenbugs in the zeno tarpits. Vitalik understands this, or did Eve hand him the temptation?¹⁶

“Your trust model collapses to … its weakest link [off-chain oracles, e.g. Chainlink]” — Charles Hoskinson

Or even winner-take-all resolution of dueling oligarchies. Given that Ethash miners were obviously power-law distributed, some huge mining farms and large pools were receiving egregiously profitable 𝙴𝚃𝙷 gas transaction fees priced as if every block had to be recomputed (aka validated) by a huge number of independent full nodes (miners) observing the network, when in fact the computation was likely highly centralized and efficient. Now, consequently, smart contracts need to pay Chainlink fees for the less secure, permissioned, trusted illusion of their “verifiable random function” (VRF).

“It’s a big club and you ain’t in it … The game is rigged folks, yet nobody seems to notice, nobody seems to care” — George Carlin

Which is the retort to those snowflakes who blissfully balk at such suggestions with, “but then why haven’t we seen these effects and massive failures.” Well we have, with numerous examples, some I aforelinked, but nevertheless the clusterf*ckd Rube Goldberg designs[self-flagellation(1) masochism], ironically intrepidly marketed as quixotic utopias, are the pretext and technological enablers for ensuring the centralized control necessary to maintain the illusion of functionality and widespread adoption.

Ditto Filecoin.

¹⁶ As intelligent as he is, how can Vitalik claim that the warmonger, Weberian power enabled by being able to print digital digits of fiat out-of-thin-air by the $trillions to fund the government’s sovereign bonds is only the small seigniorage of printing physical currency?🤦♂️🤡 That’s as bonkers and disconnected from reality as his apparent, erroneous belief that Putin is the problem (with human rights violations) in Ukraine.

The US Navy has been deployed to the Mediterranean Sea. Are we sending extremely advanced technology to defeat a few hundred barely trained ground soldiers? The US sent two carriers to an enemy that has no carriers or aircrafts aside from some paragliders who have managed to bypass the Iron Dome. Again, Palestine does not have a military – at all. — Martin Armstrong

…more to come…still editing this…

[1] Tal Moran and Ilan Orlov. Simple Proofs of Space-Time and Rational Proofs of Storage. Cryptology ePrint Archive, Paper 2016/035, 2016. 𝚑𝚝𝚝𝚙𝚜://𝚎𝚙𝚛𝚒𝚗𝚝.𝚒𝚊𝚌𝚛.𝚘𝚛𝚐/𝟸0𝟷𝟼/0𝟹𝟻. (Note §1 Introduction has inane criticisms of proof-of-work.)

[2] BitFury Group. Proof of Stake versus Proof of Work. 2015. 𝚑𝚝𝚝𝚙𝚜://𝚋𝚒𝚝𝚏𝚞𝚛𝚢.𝚌𝚘𝚖/𝚌𝚘𝚗𝚝𝚎𝚗𝚝/𝚍𝚘𝚠𝚗𝚕𝚘𝚊𝚍𝚜/𝚙𝚘𝚜-𝚟𝚜-𝚙𝚘𝚠-𝟷.0.𝟸.𝚙𝚍𝚏. (Note last paragraph of §4.2 Hybrid PoW / PoS Consensus describes an insecure system because oligarchic proof-of-stake can refuse to include proof-of-work solutions thus bankrupting proof-of-work miners who don’t pay kickbacks.)

[3] Andrew Poelstra. On Stake and Consensus. 2015. 𝚑𝚝𝚝𝚙𝚜://𝚍𝚘𝚠𝚗𝚕𝚘𝚊𝚍.𝚠𝚙𝚜𝚘𝚏𝚝𝚠𝚊𝚛𝚎.𝚗𝚎𝚝/𝚋𝚒𝚝𝚌𝚘𝚒𝚗/𝚙𝚘𝚜.𝚙𝚍𝚏.

[4] Vitalik Buterin. Proof of Stake: How I Learned to Love Weak Subjectivity. Ethereum Foundation blog, 2014. 𝚑𝚝𝚝𝚙𝚜://𝚋𝚕𝚘𝚐.𝚎𝚝𝚑𝚎𝚛𝚎𝚞𝚖.𝚘𝚛𝚐/𝟸0𝟷𝟺/𝟷𝟷/𝟸𝟻/𝚙𝚛𝚘𝚘𝚏-𝚜𝚝𝚊𝚔𝚎-𝚕𝚎𝚊𝚛𝚗𝚎𝚍-𝚕𝚘𝚟𝚎-𝚠𝚎𝚊𝚔-𝚜𝚞𝚋𝚓𝚎𝚌𝚝𝚒𝚟𝚒𝚝𝚢.

[5] Vicent Sus. Proof-of-Stake Is a Defective Mechanism. Cryptology ePrint Archive, Paper 2022/409, 2022. 𝚑𝚝𝚝𝚙𝚜://𝚎𝚙𝚛𝚒𝚗𝚝.𝚒𝚊𝚌𝚛.𝚘𝚛𝚐/𝟸0𝟸𝟸/𝟺0𝟿.

[6] 1e96a1b27a6cb85df68d728cf3695b0c46dbd44d. Filecoin: A Cryptocurrency Operated File Storage Network. filecoin.io, 2014. 𝚑𝚝𝚝𝚙𝚜://𝚏𝚒𝚕𝚎𝚌𝚘𝚒𝚗.𝚒𝚘/𝚏𝚒𝚕𝚎𝚌𝚘𝚒𝚗-𝚓𝚞𝚕-𝟸0𝟷𝟺.𝚙𝚍𝚏.

[7] Emperor. Verifiable Delay Functions. Mirror (crypto) blog, 2022. 𝚑𝚝𝚝𝚙𝚜://𝚌𝚛𝚢𝚙𝚝𝚘.𝚖𝚒𝚛𝚛𝚘𝚛.𝚡𝚢𝚣/𝚎𝚘𝙷𝚆𝙰_𝚖𝚙𝚄𝚔𝙹𝚄𝙺𝟺𝟿𝙴𝚞𝚓𝚍𝚍𝟾𝚄𝚚-𝚓𝙵𝙳𝚋𝟷𝙼𝟾𝙳𝚑𝚁𝟻𝚚𝚎𝙰𝚟𝚒𝚝𝚓𝙰.

[8] Ben Fisch. Tight Proofs of Space and Replication. Cryptology ePrint Archive, Paper 2018/702, 2018. 𝚑𝚝𝚝𝚙𝚜://𝚎𝚙𝚛𝚒𝚗𝚝.𝚒𝚊𝚌𝚛.𝚘𝚛𝚐/𝟸0𝟷𝟾/𝟽0𝟸.

[9] Peng Huang, Chuanxiong Guo, Lidong Zhou, Jacob R. Lorch, Yingnong Dang, Murali Chintalapati, Randolph Yao. Gray Failure: The Achilles’ Heel of Cloud-Scale Systems. Microsoft Research, 2017. 𝚑𝚝𝚝𝚙𝚜://𝚠𝚠𝚠.𝚖𝚒𝚌𝚛𝚘𝚜𝚘𝚏𝚝.𝚌𝚘𝚖/𝚎𝚗-𝚞𝚜/𝚛𝚎𝚜𝚎𝚊𝚛𝚌𝚑/𝚠𝚙-𝚌𝚘𝚗𝚝𝚎𝚗𝚝/𝚞𝚙𝚕𝚘𝚊𝚍𝚜/𝟸0𝟷𝟽/0𝟼/𝚙𝚊𝚙𝚎𝚛-𝟷.𝚙𝚍𝚏.

[10] Sam Toueg, Vassos Hadzilacos. Asynchronous Systems with Failure Detectors: A Practical Model for Fault-Tolerant Distributed Computing. Unifying Theory and Practice in Distributed Systems, September 1994, pg 10. 𝚑𝚝𝚝𝚙𝚜://𝚍𝚛𝚘𝚙𝚜.𝚍𝚊𝚐𝚜𝚝𝚞𝚑𝚕.𝚍𝚎/𝚘𝚙𝚞𝚜/𝚟𝚘𝚕𝚕𝚝𝚎𝚡𝚝𝚎/𝟸0𝟸𝟷/𝟷𝟺𝟿𝟾𝟺/𝚙𝚍𝚏/𝙳𝚊𝚐𝚂𝚎𝚖𝚁𝚎𝚙-𝟿𝟼.𝚙𝚍𝚏#𝚙𝚊𝚐𝚎=𝟷0.

[11] Benedikt Bünz, Steven Goldfeder, Joseph Bonneau. Proofs-of-delay and randomness beacons in Ethereum. IEEE S&B Workshop, 2017. 𝚑𝚝𝚝𝚙𝚜://𝚓𝚋𝚘𝚗𝚗𝚎𝚊𝚞.𝚌𝚘𝚖/𝚍𝚘𝚌/𝙱𝙶𝙱𝟷𝟽-𝙸𝙴𝙴𝙴𝚂𝙱-𝚙𝚛𝚘𝚘𝚏_𝚘𝚏_𝚍𝚎𝚕𝚊𝚢_𝚎𝚝𝚑𝚎𝚛𝚎𝚞𝚖.𝚙𝚍𝚏. Author’s webpage for slides, talk, code: 𝚑𝚝𝚝𝚙𝚜://𝚌𝚛𝚢𝚙𝚝𝚘.𝚜𝚝𝚊𝚗𝚏𝚘𝚛𝚍.𝚎𝚍𝚞/~𝚋𝚞𝚎𝚗𝚣/𝚙𝚞𝚋𝚕𝚒𝚌𝚊𝚝𝚒𝚘𝚗𝚜/.

[12] Filecoin spec. 𝚑𝚝𝚝𝚙𝚜://𝚜𝚙𝚎𝚌.𝚏𝚒𝚕𝚎𝚌𝚘𝚒𝚗.𝚒𝚘/.

[13] Filecoin docs. 𝚑𝚝𝚝𝚙𝚜://𝚍𝚘𝚌𝚜.𝚏𝚒𝚕𝚎𝚌𝚘𝚒𝚗.𝚒𝚘/.

[14] Joseph Bonneau, Jeremy Clark, and Steven Goldfeder. On Bitcoin as a public randomness source. Cryptology ePrint Archive, Paper 2015/1015, 2015. 𝚑𝚝𝚝𝚙𝚜://𝚎𝚙𝚛𝚒𝚗𝚝.𝚒𝚊𝚌𝚛.𝚘𝚛𝚐/𝟸0𝟷𝟻/𝟷0𝟷𝟻.

Was I prescient?

My (censored) comment on the CAP Theorem (https://en.wikipedia.org/wiki/CAP_theorem) distributed systems limitation:

https://www.goland.org/blockchain_and_cap/comment-page-1/#comment-2681576

https://www.goland.org/blockchain_and_cap/#comment-2681576

“Bitcoin is always and forever only probabilistically consistent, thus never CP [but always AP in the CAP]. This blog is incorrect, although it does illustrate a point about trading off availability latency for [a naively computed? c.f. below…] probability of consistency. Unfortunately the blog does not even mention probability.”

Bitcoin’s partition tolerance doesn’t mean partitioning is nice. Imagine aliens mining Bitcoin on Mars exploiting some technology for nearly free energy, at a million times the hash rate of on Earth.

Later when they broadcast their chain to Earth, all earthly Bitcoin wealth will be erased and fleeced (https://paulkernfeld.com/2016/01/15/bitcoin-cap-theorem.html#big-partitions).[1] Thus Bitcoin is only ever probabilistically consistent.[7] Bitcoin wealth is not absolute.

[1] For n00bs, the Nakamoto consensus protocol rule is that the partition (i.e. fork) with the longest chain (actually to be more precise the highest cumulative proof-of-work difficulty) always wins and the other fork is orphaned.
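The “highest cumulative proof-of-work” rule can be sketched in a few lines of Python. Each block contributes work of roughly 2^256 / (target + 1), and the fork with the largest sum wins regardless of its block count. The targets below are made-up numbers chosen only to show a short, high-difficulty fork beating a longer, low-difficulty one.

```python
def block_work(target: int) -> int:
    """Expected number of hashes needed to find a block at or below `target`."""
    return 2**256 // (target + 1)


def chain_work(targets: list[int]) -> int:
    """Cumulative work of a fork, given the target of each of its blocks."""
    return sum(block_work(t) for t in targets)


def best_fork(forks: dict[str, list[int]]) -> str:
    """Nakamoto fork choice: pick the fork with the most cumulative work."""
    return max(forks, key=lambda name: chain_work(forks[name]))


forks = {
    "earth": [2**240] * 6,  # six blocks at an easier (larger) target
    "mars": [2**220] * 1,   # one block at a target about a million times harder
}
print(best_fork(forks))  # mars: one hard block outweighs six easy ones
```

This is why the hypothetical Martian chain described above would win on arrival: the rule compares accumulated work, not length or arrival order.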

Competing forks are resolved probabilistically w\high probability (by orphaning all but one) typically within up to ~6 blocks; but not if network partitioned such that the forks aren’t aware of the other (until much later).

This is an example of lying w\Probability & Statistics (https://en.wikipedia.org/wiki/Lies,_damned_lies,_and_statistics)— why I emphasize the true independent random variable is often the unaccounted (even unknowable) factor that can lead to such egregiously incorrect computations. The long-tail distribution (aka Black Swan event) is essentially failure of assumptions about the independent variable due to appearance of a Black Swan outlier. Accumulated inertia of such assumptions is the antithesis of Taleb’s antifragility.

[Technobabble minutiae: probability of finality (https://math.stackexchange.com/questions/2356763/the-probability-behind-bitcoin) (i.e. consistency, c.f. also (https://bitcoil.co.il/Doublespend.pdf#page=7)) in Nakamoto consensus is normally calculated (https://ems.press/content/serial-article-files/11512#page=2) (actually approximated (https://arxiv.org/pdf/1801.07447#section.5)) as a (continuous (https://archive.ph/https://suhailsaqan.medium.com/explaining-bitcoin-mining-as-a-poisson-distribution-92b2481fb80f)) Poisson process with an exponential distribution of time between blocks (https://bitcoin.stackexchange.com/questions/25293/probablity-distribution-of-mining) and the (discrete) Poisson distribution (https://en.wikipedia.org/wiki/Poisson_distribution) for blocks per period, wherein the average constant rate of events is given by the average time to find a proof-of-work hash solution given the current level of mining difficulty and the network’s cumulative hash rate.]
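For reference, the approximation from the Bitcoin whitepaper (§11) can be computed as follows. This is a sketch of that Poisson-based formula only. The parameter names are mine, and the model assumes the attacker and the honest miners share one connected network, which is exactly the assumption questioned here.

```python
import math


def attacker_success(q: float, z: int) -> float:
    """Probability an attacker with hashrate share q ever catches up from z blocks behind."""
    p = 1.0 - q
    if q >= p:
        return 1.0  # a majority attacker always catches up eventually
    lam = z * q / p  # expected attacker progress while the honest chain mines z blocks
    prob = 1.0
    for k in range(z + 1):
        poisson = math.exp(-lam) * lam**k / math.factorial(k)
        prob -= poisson * (1.0 - (q / p) ** (z - k))
    return prob


for confirmations in range(0, 7):
    print(confirmations, f"{attacker_success(0.10, confirmations):.7f}")
```

With q = 0.1 the result falls from 1.0 at zero confirmations to under 0.1% at five, matching the table in the whitepaper. None of that changes if a partition mines at a different hashrate, because no term in the formula represents it.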

But the Poisson process (i.e. a probabilistic) model is unaware of a partition running (e.g. on Mars by aliens) at a different hashrate. The “independent” random variable in the Poisson model is actually dependent on the probability distribution of partition events (i.e. an orthogonal probabilistic model), something you will never see mentioned in any mathematical discussion of Bitcoin. Heck this isn’t merely theoretical nor so far-fetched given the radio signal propagation delay between Mars and Earth ranges from 4.3 to 21 minutes, making Bitcoin entirely unsuitable for an interplanetary mined blockchain.

[7] In an asynchronous network,[8] footnote #1 in Andrew Poelstra’s On Stake and Consensus (https://download.wpsoftware.net/bitcoin/pos.pdf) correctly cited that absolute termination (i.e. final consistency) is impossible unless **dysfunctionally partition intolerant** (http://web.archive.org/web/20130312052529/http://blog.cloudera.com/blog/2010/04/cap-confusion-problems-with-partition-tolerance/). Nakamoto consensus sidesteps that FLP85 impossibility theorem by (its transactions) being only probabilistically (and thus never absolutely) final.

Proof-of-stake requires a synchronous network assumption enabling “dysfunctionally partition intolerant” deterministic finality (https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery/comment/56466346) but forsaking on-chain objectivity (https://blog.ethereum.org/2014/11/25/proof-stake-learned-love-weak-subjectivity) (c.f. also (https://www.reddit.com/r/CryptoTechnology/comments/od9ves/proof_of_stake_how_i_learned_to_love_weak/) and §4.3 “Long-Range” vs “Short-Range” Attacks (https://download.wpsoftware.net/bitcoin/pos.pdf#subsection.4.3)) inter alia.[9]

[8] Asynchronous networks have no max message arrival latency. Actually Bitcoin’s 10 min block period is a synchrony assumption, but the (propagation delay) network diameter is so much smaller; it’s for practical purposes almost (https://tik-old.ee.ethz.ch/file//49318d3f56c1d525aabf7fda78b23fc0/P2P2013_041.pdf#page=8) equivalent to an asynchronous assumption. C.f. 1 (https://www.reddit.com/r/btc/comments/gxng23/comment/ft97xgd/), 2 (https://www.reddit.com/r/btc/comments/gxng23/comment/ft3qgsi/) and Vitalik’s summary w\less exact equation (https://blog.ethereum.org/2014/07/11/toward-a-12-second-block-time#stales-efficiency-and-centralization).

[9] Specific (https://web.stanford.edu/class/ee374/lec_notes/lec15.pdf#subsection.1.4) (dubiously (https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery#%C2%A7liveness-attack)-ameliorated (https://web.stanford.edu/class/archive/ee/ee374/ee374.1206/downloads/l18_notes.pdf)) attacks against (https://bitfury.com/content/downloads/pos-vs-pow-1.0.2.pdf#page=16) proof-of-stake consensus distract focus from its insoluble deficiencies: it lacks cost-of-production (https://steemit.com/bitcoin/@anonymint/secrets-of-bitcoin-s-dystopian-valuation-model) and thus has no monetary value (https://archive.ph/https://www.zerohedge.com/news/2019-05-25/drop-gold-myths-naturalist-exposition-golds-manifest-superiority-bitcoin-money), has inferior liveness (https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery#%C2%A7liveness-attack) (rubber-hose (https://www.google.com/search?q=rubber-hose+cryptography) the whales![10]), can’t distribute in a decentralized manner (https://bitfury.com/content/downloads/pos-vs-pow-1.0.2.pdf#subsection.3.2), and its cost-free forks (https://bitfury.com/content/downloads/pos-vs-pow-1.0.2.pdf#subsection.3.1) are all equally (in)“secure” because they lack opportunity cost.

I wrote (https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery#%C2%A7what-might-work):

“stake is always conserved”

[10] https://www.reddit.com/r/programming/comments/7ph6m/rubberhose_cryptanalysis_russian_name/ (Russian name: "Thermorectal cryptanalysis" 🤣)

===========

Hypothetically even brute-force, nearly cost-free, long-range attacks (analogous to a withheld/uncontactable partition) might someday be viable on Bitcoin: hypothetically[2] a quantum computer employing Grover’s algorithm for preimage search[3] might be able to orphan the entire Bitcoin history since inception, c.f. *§5 Resistance to quantum computations* of Iota’s Tangle whitepaper.[4] However, quantum computers are nowhere near practical yet[3][5] and Daniel J. Bernstein showed that at least one quantum algorithm (for hash collisions) will **never** be more cost-efficient than its classical counterpart.[3][6]

Note this posited attack presumes the profit/motivation from/for orphaning the non-quantum history subsumes appending quantum computing mined blocks to said history.
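For a sense of scale, Grover’s algorithm needs on the order of (π/4)·√N iterations where a classical search expects about N attempts. The sketch below only evaluates that textbook expression. The work figure is an arbitrary illustrative number, not a measured Bitcoin value, and it ignores that Grover iterations are inherently serial and that error correction overhead could swamp the gain.

```python
import math


def grover_iterations(expected_classical_hashes: int) -> int:
    """Approximate Grover iterations to find one solution that classically costs N tries."""
    return math.ceil(math.pi / 4 * math.sqrt(expected_classical_hashes))


classical = 2**76  # hypothetical expected hashes per block, for illustration only
quantum = grover_iterations(classical)
print(f"classical ~2^{math.log2(classical):.0f}, Grover ~2^{math.log2(quantum):.1f} iterations")
```

The speedup is quadratic, not exponential, which is part of why the cost caveats cited above[3][6] matter so much for whether such an attack could ever be “nearly cost-free.”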

[2] https://www.scottaaronson.com/democritus/lec14.html

[3] https://crypto.stackexchange.com/questions/63236/what-benefits-quantum-offer-over-classical-parallelism

[4] https://assets.ctfassets.net/r1dr6vzfxhev/2t4uxvsIqk0EUau6g2sw0g/45eae33637ca92f85dd9f4a3a218e1ec/iota1_4_3.pdf#page=26

[5] https://viterbischool.usc.edu/news/2023/06/quantum-computers-are-better-at-guessing-new-study-demonstrates/

[6] https://cr.yp.to/papers.html#collisioncost (https://cr.yp.to/hash/collisioncost-20090823.pdf)

Reacting to:

https://0xfoobar.substack.com/p/ethereum-proof-of-stake#%C2%A7ethereum-implementation

“Ethereum’s PoS implementation has been teased for the better half of a decade now, but with the beacon chain running for 18 months straight and successful live merges on multiple testnets the initial implementation is largely finalized.”

Dubious whether bugs and corner cases have been exorcised given the high likelihood of the natural oligarchy enforcing a preferred order:

https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery/comment/56417630

“A supermajority (2/3rds) of validators is required to finalize a block, in case of a 50-50 network partition blocks would stop being finalized and attestation rewards would stop. Non-participating validators would slowly leak stake through the inactivity leak until online validators once again had a supermajority. This is the ‘self-healing’ mechanism that allows both safety and liveness.”

THIS ENTIRELY LACKS THE PARTITION TOLERANCE OF THE CAP THEOREM.[1] This design bleeds out the partitioned stake from every partition, so they are permanently forked off from each other unlike for proof-of-work where all but one of the partitions will eventually be orphaned when the network returns to normal.

[1] https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery/comment/57176124
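A toy model of the quoted “self-healing” mechanism shows how each side of a 50-50 partition can regain a ⅔ supermajority on its own chain. The sketch assumes a constant per-epoch leak rate, which is a simplification of mine (the real Ethereum penalty schedule differs), and the function name is hypothetical.

```python
def epochs_until_finality(online: float, offline: float, leak_rate: float):
    """Leak the offline stake each epoch until online stake is at least 2/3 of the total."""
    if not 0 < leak_rate < 1:
        raise ValueError("leak_rate must be in (0, 1)")
    epochs = 0
    while 3 * online < 2 * (online + offline):
        offline *= 1 - leak_rate  # only the validators this chain cannot see are penalized
        epochs += 1
    return epochs, offline


# Each partition sees itself as online and the other half as offline.
for side in ("partition A", "partition B"):
    epochs, remaining = epochs_until_finality(online=50.0, offline=50.0, leak_rate=0.01)
    share = 50.0 / (50.0 + remaining)
    print(f"{side}: finalizes again after {epochs} epochs with {share:.1%} online stake")
```

In this model both partitions resume finalizing, each on a chain where the other half has been bled down, which is the permanent fork described above rather than one partition being orphaned.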

Also this presents either an inferior liveness situation to proof-of-work or a means for the natural oligarchy to (probably insidiously, surreptitiously) extract value:

https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery/comment/56417630

“Equivocation is punished by slashing up to the validator’s entire stake, so the attacker must commit to destroying at least one-third of all staked ETH. The cost to reorg a finalized block is several billion dollars, even at today’s depressed prices.”

Not that they have any incentive to do so when the natural oligarchy (w\majority stake) can more easily and effectively extract value insidiously instead. There are theoretical long-range attacks for said natural oligarchy in proof-of-stake against lock-up deposit penalty mechanisms, but this simply isn’t the preferred way; proof-of-stake systems instead mine the FOMO n00bs by surreptitious value extraction.

“One key difference is that the honest validators would have to explicitly band together to recognize one another’s attestations and override the fork choice rule, but other than that they can form their own child chain and the malicious supermajority would slowly bleed stake out of the validator set until the honest subminority has once again regained a supermajority.”

The majority stake chain can censor blocks and attestations! The bleeding based on inactivity of attestations is a double-edged sword that can be (even insidiously) turned against the subminority by the malevolent supermajority. The “he said, she said” dilemma of weak subjectivity[2] applies if instead the honest minority band together to create their own fork to bleed out what they claim is the dishonest majority.

[2] https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery/comment/56420675

https://anonymint1.substack.com/p/decentralized-data-storage-and-delivery/comment/56417630

In addition to some additional points I made at the aforelinked, Lyn Alden also refuted (https://www.youtube.com/watch?v=1m12zgJ42dI) the following linked section. Essentially she argued against the claim that the highest economies-of-scale earn the most proof-of-work profit, because she alleges there’s too much risk (e.g. jurisdictional) and micromanaged opportunity-cost (e.g. making deals in situations where byproduct energy or natural sources are normally discarded/unharvested).

https://0xfoobar.substack.com/p/ethereum-proof-of-stake#%C2%A7pos-rich-get-richer-pow-egalitarian